Research|Willow: High Scientific Value, but Quantum Computing Still Far from Practical Use

An Industry Expert's Perspective

Today, social media was flooded with discussion of a research paper on Google's quantum computing chip, Willow, titled "Quantum Error Correction Below the Surface Code Threshold." The paper sparked widespread debate, with some of the more radical takes predicting that Nvidia's terminal value could fall to zero within 20 years. Such opinions are sensational, and they are probably exaggerated. Having studied related fields, I read the paper closely. My overall impression: its scientific value is indeed very high, but it is premature to say that quantum computing will replace Nvidia anytime soon.

Scientific Value: Willow is a Milestone in Quantum Computing

From a scientific perspective, the potential significance of this paper is indeed "very, very high." Since establishing the Quantum AI Lab in 2014, Google has been committed to pushing quantum computing from theory toward practical applications. Over the past decade, it has achieved a series of breakthroughs in superconducting qubit hardware, laying the experimental foundation for quantum computing. One of the biggest challenges, however, is noise: temperature, magnetic fields, and even cosmic radiation can disturb qubits and introduce errors into computational results. Noise remains the main technical bottleneck in quantum computing today.

More importantly, under normal circumstances the overall error rate rises as the number of qubits increases. Willow reverses this trend: as more physical qubits are added to each error-corrected logical qubit, the logical error rate decreases exponentially. Google achieved this by running surface codes on top of a series of hardware and system improvements, such as extending qubit coherence times, optimizing decoding algorithms (including neural-network and real-time decoders), and analyzing rare error sources. The paper also demonstrates that a real-time decoder can keep up with fast error-correction cycles. As a result, the fault tolerance of the Willow system has improved significantly, and this can be seen as the first demonstration that quantum computing is conceptually "usable."
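To make "decreases exponentially" concrete: the paper's abstract reports that the logical error rate per cycle is suppressed by a factor of Λ = 2.14 ± 0.02 each time the surface code distance grows by two, reaching about 0.143% per cycle at distance 7. The sketch below simply applies that scaling rule. The function name is hypothetical, and the values printed for distances above 7 are an extrapolation that assumes the same factor keeps holding, not results from the paper.

```python
# Illustrative sketch: exponential suppression of logical error with code distance.
LAMBDA = 2.14      # suppression factor per distance step of 2 (from the paper's abstract)
EPS_D7 = 0.00143   # logical error per cycle at distance 7 (from the paper's abstract)


def logical_error_per_cycle(distance: int) -> float:
    """Assumed scaling rule: eps(d) = EPS_D7 / LAMBDA ** ((d - 7) / 2)."""
    if distance < 3 or distance % 2 == 0:
        raise ValueError("surface code distance is assumed to be odd and >= 3")
    return EPS_D7 / LAMBDA ** ((distance - 7) / 2)


if __name__ == "__main__":
    # Distances 9 and 11 are extrapolations under the assumed scaling rule.
    for d in (3, 5, 7, 9, 11):
        print(f"d={d:2d}  error per cycle ~ {logical_error_per_cycle(d):.2e}")
```

The key point is the shape of the curve: every step up in distance divides the error by roughly the same factor, so a modest amount of extra redundancy buys a large gain in reliability.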

By way of comparison, Google's body of research from 2018 to 2024 could perhaps be seen as the "transistor invention moment" for quantum computing: it opens up, in principle, the possibility of future industrialization. Several advances stand out:

Qubit lifetime has been increased from 20 μs to 100 μs.

The feasibility of error correction has been proven, with larger systems and more redundancy indeed reducing the error rate and increasing the logical qubit lifetime.

The effectiveness of tunable couplers has been demonstrated, which can be understood as directly improving qubit yield: even a qubit that does not perform well as manufactured can be tuned afterward to perform better.

Building on these advances, Google ran a larger and deeper random circuit sampling task. Willow finished it in under five minutes, while Google estimates that one of today's fastest supercomputers would need about 10^25 years. The task itself, however, has no practical application.

A Long Way to Practicality

However, there is always a long way to go between a scientific breakthrough and practical application. Although this research validates the feasibility of fault-tolerant quantum computing, truly practical machines will still require many years, or even decades, of technological accumulation and hardware optimization. For example, the processor in this study has only 105 qubits, while many practical applications require thousands or even millions. Moreover, the task demonstrated so far is still an "entry-level" quantum problem (such as random circuit sampling), which is quite different from practical problems (such as factoring).

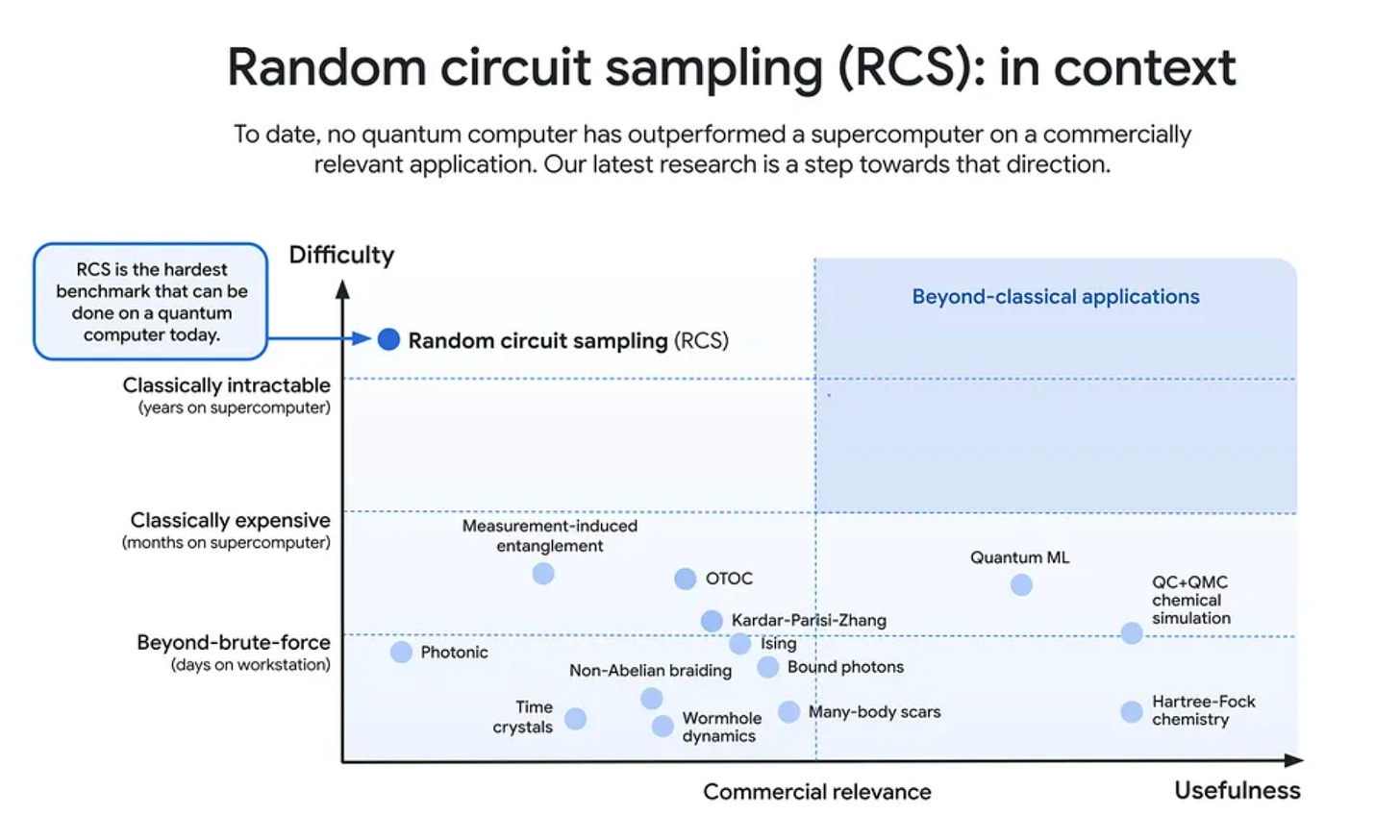

In fact, the paper itself makes this point with the diagram above, which shows that there is currently no commercial value and then points out future directions.
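A rough way to see where "thousands or even millions" of qubits comes from: a distance-d surface code uses d² data qubits plus d² − 1 measure qubits, and the paper reports that the logical error rate falls by a factor of about 2.14 per distance step of two, starting from about 0.143% per cycle at distance 7. The sketch below assumes that this scaling keeps holding at larger distances (an extrapolation) and ignores any auxiliary qubits. The helper names and the figure of 1,000 logical qubits are hypothetical, chosen only for illustration.

```python
# Illustrative sketch: physical-qubit overhead of surface-code logical qubits.
LAMBDA = 2.14     # error suppression per distance step of 2 (from the paper's abstract)
EPS_D7 = 1.43e-3  # logical error per cycle at distance 7 (from the paper's abstract)


def distance_for_target(target_error: float) -> int:
    """Smallest odd distance >= 7 whose extrapolated error is at or below the target."""
    distance, eps = 7, EPS_D7
    while eps > target_error:
        distance += 2
        eps /= LAMBDA
    return distance


def physical_qubits(distance: int) -> int:
    """d*d data qubits plus d*d - 1 measure qubits for one logical qubit."""
    return 2 * distance**2 - 1


if __name__ == "__main__":
    d = distance_for_target(1e-6)
    per_logical = physical_qubits(d)
    print(f"distance needed: {d}")
    print(f"physical qubits per logical qubit: {per_logical}")
    print(f"physical qubits for 1,000 logical qubits: {1000 * per_logical}")
```

Under these assumptions, an error rate of 10^-6 per cycle needs distance 27, or 1,457 physical qubits for a single logical qubit, so a machine with 1,000 such logical qubits would already need well over a million physical qubits. A 105-qubit chip holds roughly one distance-7 logical qubit.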

From an optimistic perspective, people studying quantum computing often joke that large-scale mass production of quantum computing is still 50 years away, but now the 49-year countdown may have finally begun.

Nvidia's Potential Role
